Go C10k

Inspired by the (admittedly “ancient”) part 1 of a very instructive series of posts by Richard Jones, and while waiting for Channels API support in Google’s new Go App Engine, I decided to make a quick port of Richard’s mochiconntest_web Erlang module to Go.

Source first, discussion later:

    package main

    import (
        "fmt"
        "log"
        "net/http" // plain "http" in the pre-Go 1 release this was written for
        "time"
    )

    func main() {
        http.HandleFunc("/test/", feed)
        if err := http.ListenAndServe("127.0.0.1:8080", nil); err != nil {
            log.Fatal(err)
        }
    }

    // feed greets the client, then streams one chunk per second until a write fails.
    func feed(w http.ResponseWriter, r *http.Request) {
        ticker := time.NewTicker(time.Second)
        defer ticker.Stop() // release the ticker once the client is gone
        fmt.Fprintf(w, "Goconntest welcomes you! Your Id: %s\n", r.URL.String())
        w.(http.Flusher).Flush()
        for N := 1; ; N++ {
            <-ticker.C
            n, err := fmt.Fprintf(w, "Chunk %d for id %s\n", N, r.URL.String())
            if err != nil {
                log.Print(err)
                break
            } else if n == 0 {
                log.Print("Nothing written")
                break
            }
            w.(http.Flusher).Flush() // push each chunk out immediately
        }
    }

Of course, this could be stripped down by not handling errors, but IMHO it doesn’t fall behind the Erlang implementation in terms of beauty; by my sense of “beauty”, anyway.

You might have noticed that I’m not using the time.Sleep function, but instead a seemingly “artificial” construct with a time.Ticker channel. Waiting on the channel lets the runtime multiplex the goroutines spawned by the http package onto only a few system threads, with the network operations scheduled via epoll.

Update 2011-05-23: Removed usage of ChunkedWriter from Go source according to a hint from Brad Fitzpatrick.

Update 2011-05-25: Flushing via w.(http.Flusher).Flush() is necessary to make sure that the chunks get written in time. Thanks to Brad Fitzpatrick for pointing this out.

For the client side, I used Richard’s floodtest.erl module, only removing the {version, 1.1} line from the http:request, because that option doesn’t seem to be supported by Erlang R14B.

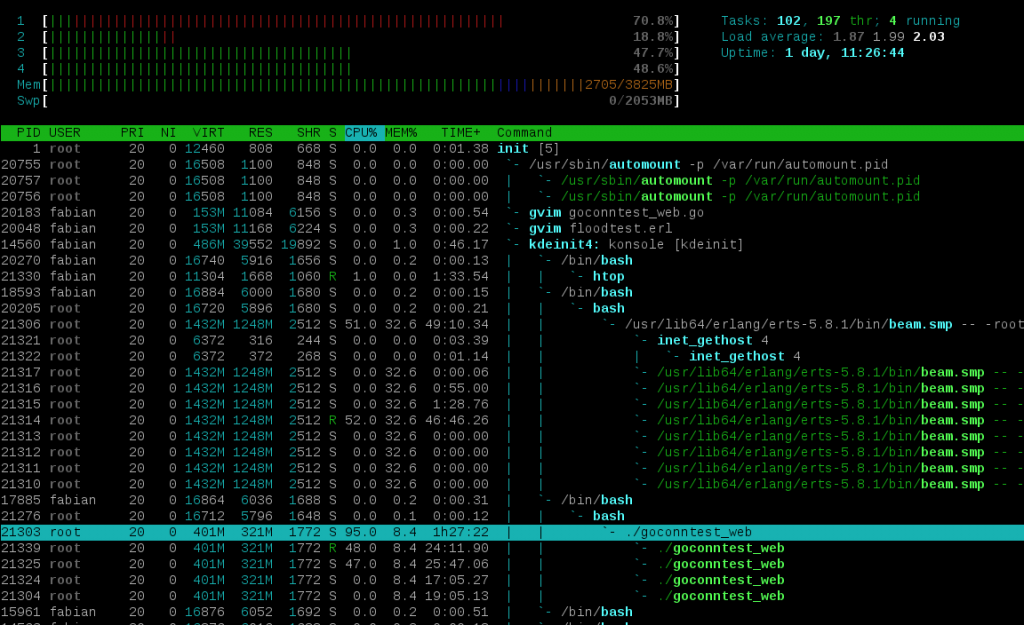

The result from my Intel(R) Core(TM) i7 CPU M 620 @ 2.67GHz:

The RSS usage of goconntest_web converges at 321MB, i.e. roughly 32KB per connection (13KB less than the ad-hoc Erlang implementation). Also note that Go indeed spawned only 4 threads to handle the 10k open connections on this machine, which has four logical cores (two physical cores with Hyper-Threading).

The only disappointment was the rather high CPU usage. Although I’m aware that the screenshot is far from an acceptable benchmark, 1.5h of CPU time still seems to be more than the “practically nothing” Richard reported for the Erlang implementation.

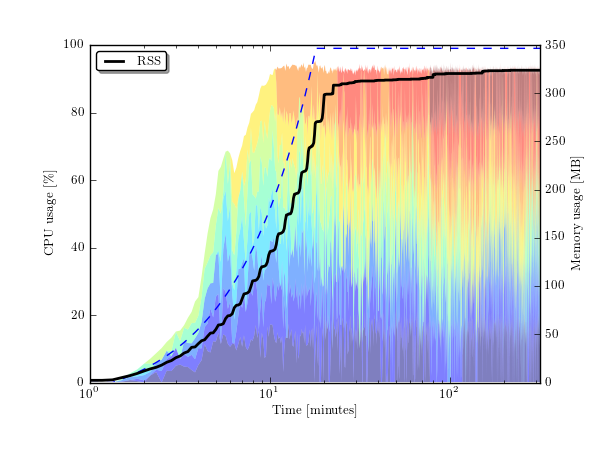

Update 2011-05-25: While testing the new code above, I logged the process statistics with pidstat and then made the following plot (Python script, data file):

Mind the logarithmic time scale! The dashed line indicates the ramp-up of opened connections to 10k (theoretical, not measured). The colorful background is a stacked plot of the per-thread CPU usage with one color per kernel thread-id. Obviously Go spawns more threads than there are cores. Finally, the thick black line is the resident memory usage, which seems to converge much later than the CPU usage.

Thanks for reading. Maybe, if the App Engine Channels API takes long enough, I will continue my investigations.